Ethics, AI & Health: What Are We Talking About? Key Insights from the Conference

Artificial intelligence is profoundly transforming the healthcare sector, opening up unprecedented opportunities in diagnosis, treatment, and prevention. But this technological revolution also raises major questions, notably around ethics, personal data protection, and regulatory frameworks.

Can we innovate without compromising ethics? Can we regulate without stifling innovation? These questions guided the entire conference organized at Future4care with our partner TNP Consultants, around a key topic: artificial intelligence in healthcare, and the conditions for its ethical and responsible deployment.

Ethics, regulation, responsibility: laying the foundations

Talking about ethics is not only talking about law. It means applying universal principles (beneficence, justice, autonomy, transparency) that must guide the use of health technologies. In the case of AI, the stakes are concrete: avoiding bias, ensuring transparency, and keeping humans in a central role.

Secondary data: should barriers be lifted?

Ségolène Aymé (INSERM), Léa Rizzuto (Health Data Hub), and Frédéric Lesaulnier (Brain Institute) opened the debate on a sensitive subject: the use of secondary health data. While the potential of these data for research is enormous, their use remains hindered by regulations sometimes considered too rigid, especially in France.

Yet all agree: the benefit-risk balance favors their use, provided that the protection of primary data is impeccable. Cultural blockages and institutional resistance remain, but above all the speakers called for more harmonized governance at the European level.

AI and regulation: understanding risks to better control them

Florence Bonnet presented the main principles of the regulatory framework governing AI and health data. She reminded the audience that risk management is at the heart of recent texts such as the European AI Act, with a structured approach aimed at anticipating risks related to discrimination, privacy, and data manipulation. Special vigilance is required across the entire AI system value chain.

A trusted AI? Yes, but under what conditions?

Acceptability, explainability, interoperability… Keywords abound, but the ideas are precise. Sarah Amrani, Clément Gakuba, Thierry Dart, Eric Théa, and Florence Tagger agree: for AI to be adopted, it must be understood, controlled, and secure.

System interoperability emerges as a fundamental issue: no AI without data, and no data without collaboration. AI must also be accompanied by training, both for healthcare professionals and patients, and include real human governance. The goal is not to replace, but to strengthen human action.

What we learn from the field

The conference ended with the exploration of concrete cases: Jennifer Gangnard, Franck Guillerot, and Malo Louvigné shared their experiences, from virtual reality to medical knowledge transfer. These are already established uses that show AI in healthcare is no longer a distant prospect but a reality demanding lucidity and responsibility.

What's next?

What this conference highlighted is that it is not enough to talk about AI: we must talk about how we design it, how we integrate it, and with whom we build it. Far from theoretical discourse, the exchanges helped anchor reflection in reality, between tensions, demands, and shared ambitions.

And it is precisely here that Future4care wants to continue positioning itself: at the crossroads of debate, practice, and innovation.

You may also like

Three Fundraising Announcements from Future4care Ecosystem Startups in September

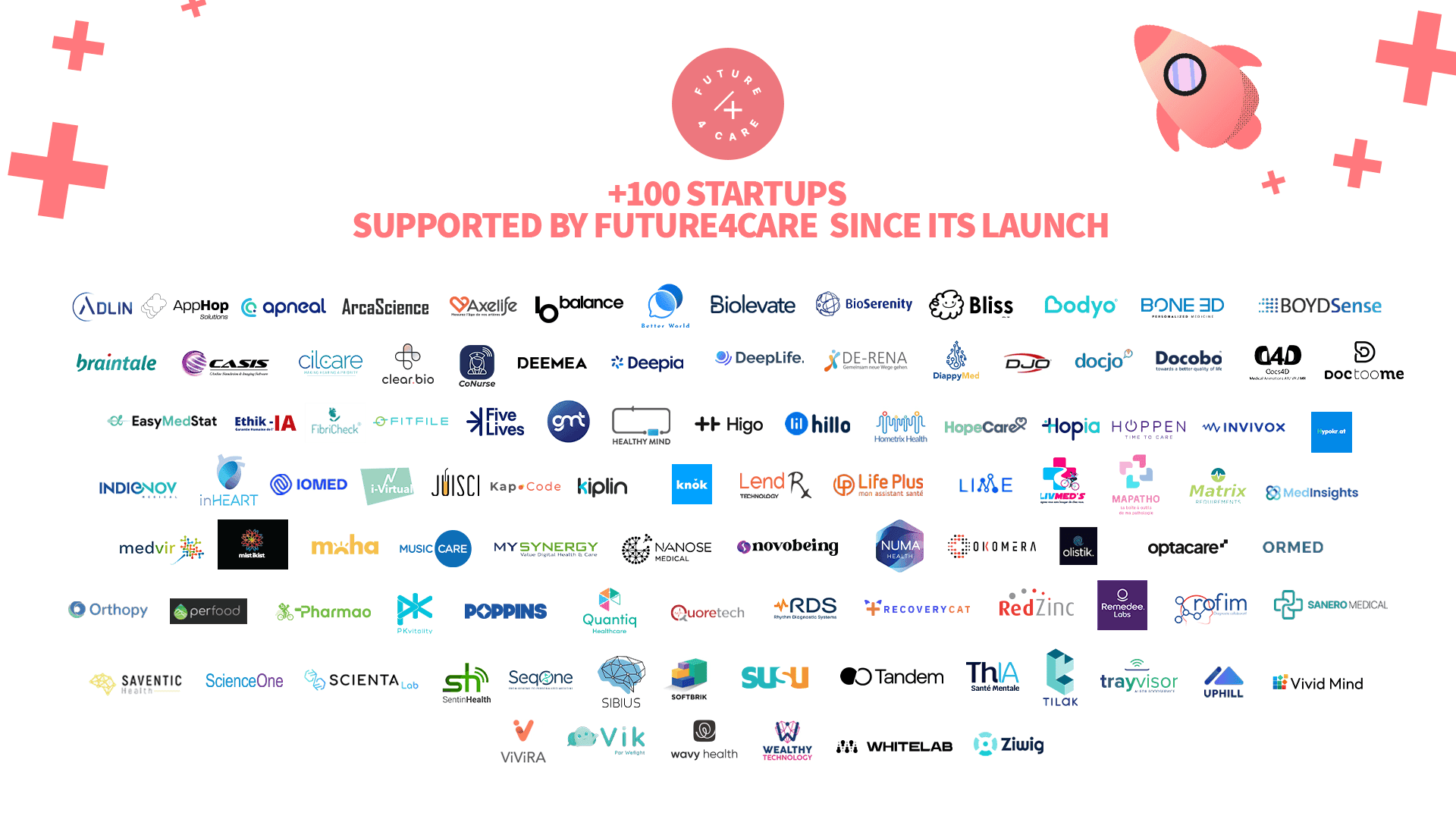

This year, Future4care celebrates a milestone: 100 startups supported on their journey to shaping the future of health.

Highlights from the Health Insurance and Social Protection Masterclass by TNP Consultants"